MindSpace3D: 3D Object Reconstruction from Brain Activity (EEG)

Last page edit: December 17, 2025.

Recent advances in visual decoding have demonstrated the feasibility of classifying and reconstructing perceived images from evoked brain activity (see our work on reconstructing 20 image classes from visually evoked brain activity measured with a portable 8-channel EEG).

However, human perception is inherently shaped by the ability to process three-dimensional (3D) visual information, a fundamental yet underexplored aspect of neuroimaging research. In this work, we introduce MindSpace3D, the first EEG dataset recorded in a virtual reality (VR) environment, designed to investigate the neural mechanisms underlying 3D visual perception. MindSpace3D captures 64-channel EEG responses from subjects viewing 3D objects from six distinct categories, presented as rotating video sequences, to support the study of stereoscopic depth processing and object recognition.

In the first study, we make three primary contributions to neural decoding and bio-inspired 3D vision:

(1) We demonstrate the first dual-stream architecture for 3D brain decoding that uses separate modules for object identity (viewpoint‑tolerant “what”) and spatial transformation (viewpoint‑dependent “where/how”) processing, achieving robust performance on both classification (up to 68% accuracy) and angular regression (10-11° MAE);

(2) We provide interpretable evidence for dynamic dorsal–ventral–motor involvement in EEG through Grad-CAM, showing that successful decoders avoid simple ventral dominance and instead rely on early motor engagement with time‑varying dorsal–ventral contributions;

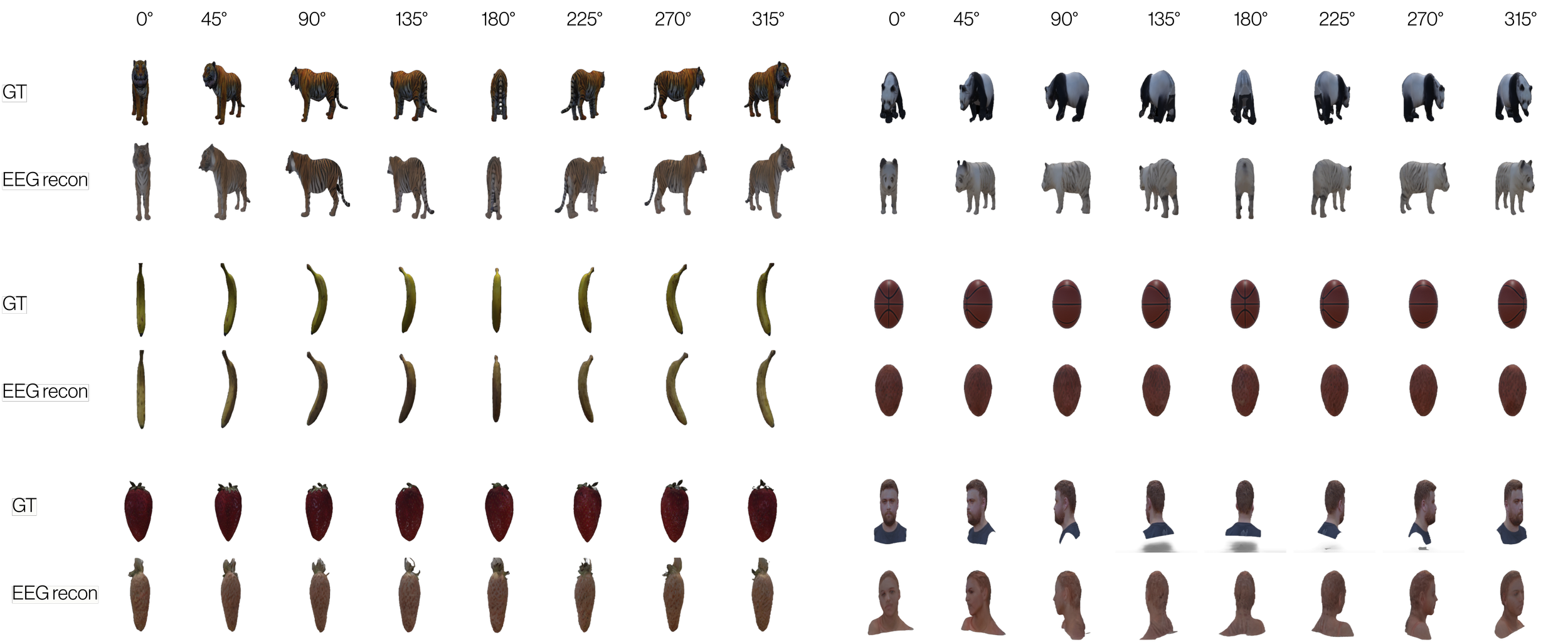

(3) We establish the feasibility of EEG-conditioned 3D reconstruction through multiview diffusion, enabling direct generation of 3D objects from neural signals. Further investigation of geometric priors through comparison with steerable CNNs and equivariant architectures would quantify the benefits of biological versus purely geometric inductive biases for 3D brain decoding. Quantitative evaluation through single-view versus multiview reconstruction metrics and analysis of rotational equivariance properties would further validate the geometric understanding captured by our dual-stream approach.
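The angular regression result in contribution (1) is reported as a mean absolute error (MAE) in degrees. The page does not say how that error is computed, so the following is only a minimal sketch under one common assumption: that rotation angles wrap at 360°, so the error between 350° and 0° counts as 10° rather than 350°. The function names `angular_error_deg` and `angular_mae_deg` are hypothetical and are not taken from the MindSpace3D code.

```python
def angular_error_deg(pred, target):
    """Smallest absolute difference between two angles, in degrees (0 to 180).

    Assumption: angles are periodic with period 360 degrees.
    """
    diff = (pred - target) % 360.0
    return min(diff, 360.0 - diff)


def angular_mae_deg(preds, targets):
    """Mean of the wrap-aware absolute errors over paired predictions and targets."""
    if len(preds) != len(targets) or len(preds) == 0:
        raise ValueError("preds and targets must be non-empty and of equal length")
    total = sum(angular_error_deg(p, t) for p, t in zip(preds, targets))
    return total / len(preds)


if __name__ == "__main__":
    # Illustrative values only; these are not results from the dataset.
    example_preds = [350.0, 10.0, 100.0]
    example_targets = [0.0, 355.0, 90.0]
    print(angular_mae_deg(example_preds, example_targets))
```

With a plain (non-wrapping) absolute difference, predictions near the 0°/360° boundary would be penalized heavily even when they are nearly correct, which is why a wrap-aware distance is the usual choice for rotation targets.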

Multiview test-set reconstructions across the 6 categories (top: ground truth; bottom: generated). Each shows 8 viewpoints (0°–315°).
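The caption gives 8 viewpoints spanning 0°–315°. Assuming the viewpoints are evenly spaced around a full turn (the page does not state this explicitly), the step is 360° / 8 = 45°. The short sketch below enumerates them; `viewpoint_angles` is a hypothetical helper name used only for illustration.

```python
def viewpoint_angles(n_views=8, full_turn=360.0):
    """Return n_views evenly spaced azimuth angles in degrees, starting at 0.

    Assumption: viewpoints are uniformly spaced over one full rotation.
    """
    if n_views <= 0:
        raise ValueError("n_views must be positive")
    step = full_turn / n_views
    return [i * step for i in range(n_views)]


if __name__ == "__main__":
    print(viewpoint_angles())
```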

Proposed dual‑stream architecture for 3D brain decoding from EEG.